Most organizations struggle with messy data files. Excel spreadsheets arrive with scattered date formats, merged cells, and calculations mixed in with raw data. These issues are common across industries.

This guide covers everything needed to tidy data and organize tables properly. Following these practices will reduce time spent wrestling with messy datasets and improve data quality across teams.

What Exactly Is “Tidy Data”? (And Why It Matters)

Tidy data can be compared to a well organized filing cabinet. Everything has its designated place, and information can be found instantly.

Tidy data follows three fundamental rules:

- Each variable gets its own column (separate folders for different topics)

- Each observation gets its own row (one document per folder slot)

- Each value gets its own cell (one piece of information per document)

Here’s what this looks like in practice:

Messy Data Example:

Country | 1999_cases | 1999_pop | 2000_cases | 2000_pop

-----------|------------|-----------|------------|----------

Afghanistan| 745 | 19987071 | 2666 | 20595360

Brazil | 37737 | 172006362 | 80488 | 174504898Tidy Data Example:

Country | Year | Cases | Population

------------|------|-------|----------

Afghanistan | 1999 | 745 | 19987071

Afghanistan | 2000 | 2666 | 20595360

Brazil | 1999 | 37737 | 172006362

Brazil | 2000 | 80488 | 174504898In the tidy version, each column represents one variable and each row represents one observation.

Benefits of Tidy Data Organization

When data is properly structured, several advantages emerge:

- Analysis tools function correctly (fewer error messages)

- Patterns become visible (trends are easier to identify)

- Data sharing becomes seamless (colleagues can work with files immediately)

- Automated processing becomes possible (workflows can be streamlined)

Core Principles of Data Organization

Principle One: Maintain Consistency

Consistency should be applied to every aspect of data organization:

Inconsistent Data Example:

Patient_ID | Gender | Blood_Type

-----------|--------|----------

001 | M | A+

002 | Male | B-

003 | m | O

004 | MALE | AB+Consistent Data Example:

Patient_ID | Gender | Blood_Type

-----------|--------|----------

001 | Male | A+

002 | Male | B-

003 | Male | O

004 | Male | AB+Consistency applies to:

- Missing values: Choose “NA” or ”-” and use consistently throughout

- Dates: Always use YYYY-MM-DD format

- File names: Establish a naming convention and follow it strictly

Date format inconsistencies are particularly problematic. When dates appear as “1/2/2023”, “Jan 2 2023”, “01-02-23”, and “2/1/23” in the same dataset, analysis becomes significantly more complex.

Principle Two: Create Descriptive Variable Names

Effective variable names function like clear street signs, providing immediate understanding of content.

Poor Variable Names:

temp(temperature of what? maximum? minimum?)data1(provides no information)Patient Weight (kg)(spaces cause software issues)$$$Sales$$$(special characters create problems)

Effective Variable Names:

max_temperature_celsiuspatient_weight_kgmonthly_sales_revenuesurvey_completion_date

Variable names should be understandable months after creation without additional context.

Principle Three: One Value Per Cell

Each cell should contain exactly one piece of information.

Multiple Values in Cells (Problematic):

Location | Notes

------------------|-------------------------

Room 204, Bldg A | Temperature: 72°F, Good

Lab B-15 | Temp: 68°F, Needs repairSeparated Information (Correct):

Building | Room | Temperature_F | Condition | Notes

---------|------|---------------|-----------|-------

A | 204 | 72 | Good | None

B | 15 | 68 | Poor | Needs repairCommon Issues to Avoid:

- Units mixed with data values (“45 kg” instead of “45” with units in column names)

- Notes combined with data values (“0 (below detection limit)” requires separate note columns)

- Merged cells for visual formatting (this disrupts data structure)

Rectangular Data Structure Requirements

Data should be organized in rectangular format. This means no unusual shapes, scattered information, or artistic layouts.

The One Sheet, One Table Rule

Spreadsheets don’t enforce a schema, so the same variable can drift from column F to H without anyone noticing. This creates major problems for automated processing.

Follow these guidelines:

- Put one clean header row on top and keep a single block of data per sheet

- Avoid side by side mini tables and stacked headers

- If you have two tables, make two sheets or two files

- Keep one continuous block with no merged cells, no floating totals, no footers inside the data area

Non-Rectangular Layout (Problematic):

PATIENT SURVEY RESULTS

Patient: John Doe Age: 45

Survey Date: 2024-01-15 Location: Clinic A

Question 1: How do you feel? Answer: Good

Question 2: Pain level? Answer: 3/10

Question 3: Sleep quality? Answer: Fair

Patient: Jane Smith Age: 32

Survey Date: 2024-01-16 Location: Clinic B

...Rectangular Layout (Correct):

Patient_ID | Name | Age | Survey_Date | Location | Feel_Rating | Pain_Level | Sleep_Quality

-----------|------------|-----|-------------|----------|-------------|------------|-------------

001 | John Doe | 45 | 2024-01-15 | Clinic A | Good | 3 | Fair

002 | Jane Smith | 32 | 2024-01-16 | Clinic B | Excellent | 1 | GoodAvoid Non-Contiguous Data Ranges

Random blank columns, merged cells, and decorative gaps make parsing brittle. Put notes below the table or in another sheet rather than scattering them throughout the data area.

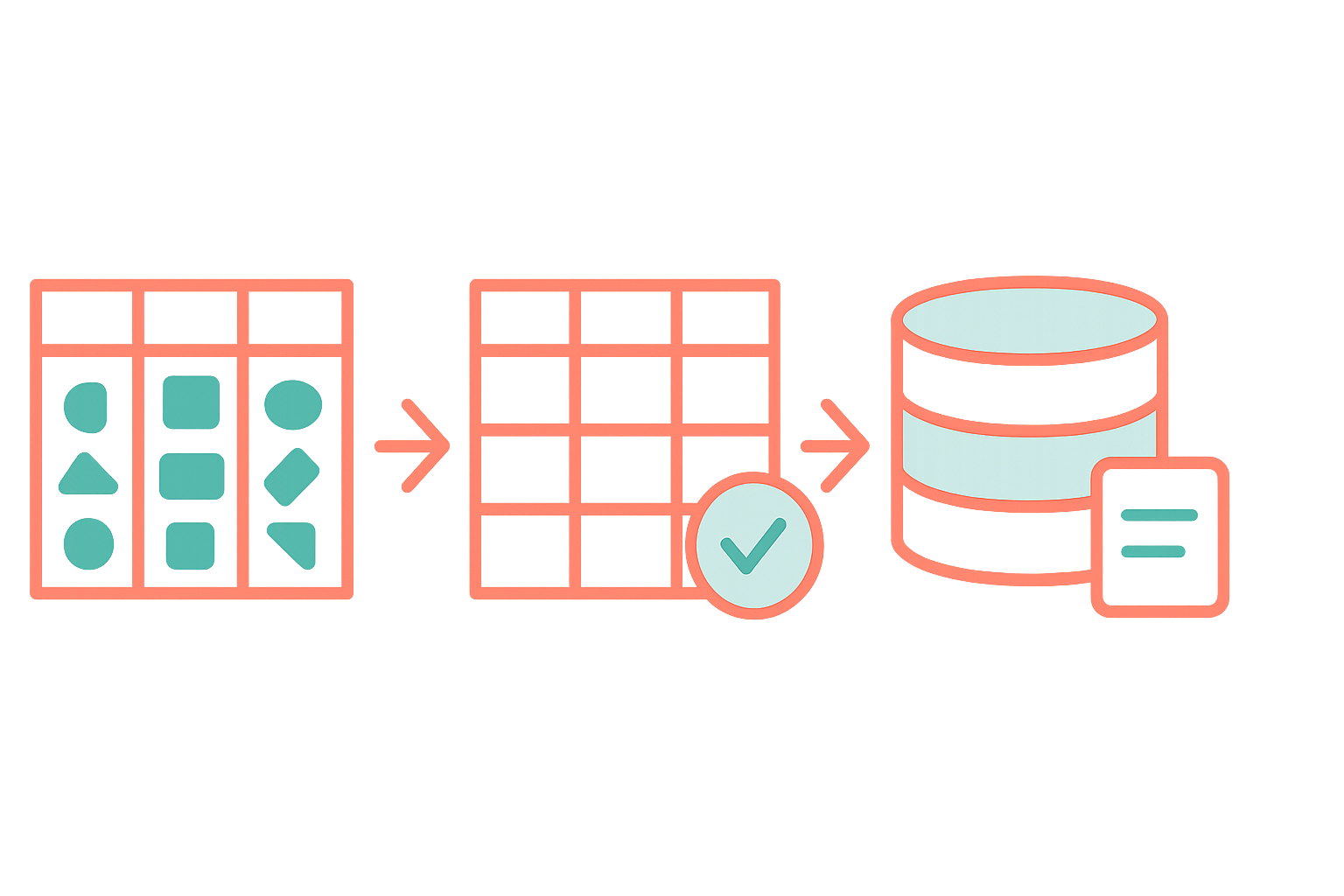

Data Transformation Techniques

Converting Wide Data to Long Format

Sometimes column names actually represent data values rather than variable names.

Wide Format (Needs Conversion):

Country | 2020 | 2021 | 2022

------------|------|------|-----

USA | 100 | 110 | 125

Canada | 50 | 55 | 60Long Format (Correct Structure):

Country | Year | Value

--------|------|------

USA | 2020 | 100

USA | 2021 | 110

USA | 2022 | 125

Canada | 2020 | 50

Canada | 2021 | 55

Canada | 2022 | 60Converting Long Data to Wide Format

Sometimes observations are split across multiple rows when they should be in single rows.

Multiple Rows Per Observation (Needs Conversion):

Country | Metric | Value

--------|------------|------

USA | Population | 330M

USA | GDP | 21T

Canada | Population | 38M

Canada | GDP | 1.7TSingle Row Per Observation (Correct Structure):

Country | Population | GDP

--------|------------|----

USA | 330M | 21T

Canada | 38M | 1.7TStrategic Table Naming Conventions

Table names should clearly communicate their contents. Names should function like descriptive titles that indicate content without requiring examination of the data.

Recommended Naming Pattern

The pattern [Subject][Timeframe][Type] works well for most scenarios:

Examples:

customer_surveys_2024q1(customer survey data from Q1 2024)sales_transactions_daily(daily sales transaction records)employee_salaries_annual(yearly employee salary information)website_traffic_hourly(hourly website analytics data)inventory_levels_realtime(current inventory status)

Content Based Naming Examples

Different data types benefit from specific naming approaches:

Financial Data:

revenue_monthly_usd(monthly revenue in US dollars)expenses_categories_2024(categorized expenses for 2024)budget_vs_actual_quarterly(budget comparison data)

Customer Data:

customers_demographics(customer demographic information)customer_orders_history(historical order data)customer_support_tickets(support request tracking)

Operational Data:

production_metrics_daily(daily production statistics)quality_control_tests(quality assurance test results)shipping_delivery_tracking(package delivery information)

Time Series Data:

temperature_readings_15min(temperature data every 15 minutes)stock_prices_eod(end-of-day stock prices)server_performance_logs(server monitoring data)

Related Table Naming

When multiple tables contain related information, consistent prefixes help organize and clarify relationships:

sales_transactions_2024

sales_returns_2024

sales_customers_2024

sales_products_2024This approach groups related tables together and makes relationships obvious.

Essential Documentation Requirements

Five critical areas require documentation to prevent common confusion and ensure data reliability: sources, methodologies, currencies, dates, and units of measurement.

Data Source Documentation

Every dataset should clearly document its origins and collection context:

Data Source: Customer satisfaction survey via SurveyMonkey

Collection Period: January 1 - March 31, 2024

Sample Size: 1,247 respondents

Response Rate: 23%

Population: Active customers with purchases in past 12 months

Geographic Scope: North American customers only

Known Limitations: Self-reported data, potential response biasKey source elements to document:

- Where the data originated (system, survey, sensor, etc.)

- Who collected it and when

- What population or system it represents

- Any known sampling biases or limitations

- Collection tools or instruments used

Methodology Documentation

Document how data was processed, transformed, or calculated:

Methodology: Customer Lifetime Value Calculation

Formula: (Average Order Value × Purchase Frequency × Customer Lifespan)

Data Processing Steps:

1. Removed duplicate transactions

2. Excluded refunded orders

3. Calculated rolling 12-month averages

4. Applied inflation adjustment using CPI data

Quality Checks: Validated against historical benchmarks

Last Updated: 2024-03-15Essential methodology elements:

- Calculation formulas and algorithms used

- Data cleaning and filtering steps applied

- Statistical methods or transformations performed

- Quality control measures implemented

- Assumptions made during processing

Currency Documentation

Currency information should always include the specific currency and reference date:

Column Name: revenue_amount_usd

Description: Revenue in US Dollars

Currency: USD

Exchange Rate Date: 2024-01-01

Notes: All international sales converted using rates from xe.comMulti-currency datasets require additional columns:

revenue_amount | currency_code | exchange_rate | base_currency

---------------|---------------|---------------|-------------

1000.00 | USD | 1.00 | USD

850.00 | EUR | 1.18 | USD

120000.00 | JPY | 0.0091 | USDDate Format Documentation

Date formats should be clearly specified:

- Format used: YYYY-MM-DD (ISO 8601 standard)

- Time zone: UTC (or specify local time zone)

- Missing dates: Coding method (e.g., “1900-01-01” for unknown dates)

Common date scenarios requiring documentation:

order_date: Date customer placed order (YYYY-MM-DD, UTC)

ship_date: Date order was shipped (YYYY-MM-DD, local warehouse time)

delivery_date: Date customer received order (YYYY-MM-DD, customer local time)Examples should be included in documentation:

order_dateexamples: 2024-03-15, 2024-12-01delivery_timeexamples: 14:30, 09:15 (24-hour format)

Units of Measurement Documentation

All numeric values require unit specification.

Physical measurements:

weight_kg: Weight in kilograms (0.1 kg precision)

temperature_celsius: Temperature in Celsius (-40 to +60 range)

distance_miles: Distance in miles (rounded to nearest 0.1)Business metrics:

response_time_ms: Server response time in milliseconds

conversion_rate_pct: Conversion rate as percentage (0-100)

satisfaction_score: 1-5 scale (1=very dissatisfied, 5=very satisfied)Financial units:

price_per_unit_usd: Price per unit in US dollars (2 decimal places)

discount_percentage: Discount as percentage of original price (0-100)

tax_rate_basis_points: Tax rate in basis points (1 bp = 0.01%)Quick Reference Documentation Template

A simple reference table can prevent most confusion:

Data Type | Format | Example | Notes

----------|--------|---------|------

Dates | YYYY-MM-DD | 2024-03-15 | ISO 8601, UTC

Currency | USD | 1234.56 | 2 decimal places

Percentages | Decimal | 0.15 | 15% stored as 0.15

Phone | +1-555-123-4567 | US format | Include country code

Email | lowercase | [email protected] | All lowercaseFile Storage and Backup Strategies

File Format Selection

Different file formats offer varying levels of reliability for long-term storage:

- CSV files (comma-separated values) - Most reliable for long-term storage

- TSV files (tab-separated values) - Good alternative when data contains commas

- Excel files - Acceptable for working files, but CSV backups are recommended

- Proprietary formats - Should be avoided unless absolutely necessary

CSV file advantages:

- Compatible with all software platforms

- Maintain integrity over time

- Cannot be corrupted by formatting features

- Minimal file size requirements

Backup System Implementation

Effective backup strategies should include:

- The 3-2-1 rule: 3 copies, 2 different storage types, 1 offsite location

- Version control: Use numbered versions (

dataset_v1.csv,dataset_v2.csv) - Write protection: Protect original files from accidental modification

- Automated backups: Reduce reliance on manual backup processes

File copying with date stamps should be standard practice before making significant changes.

Data Validation Techniques

Dropdown List Implementation

Dropdown lists prevent typing errors in categorical variables. Instead of allowing free text entry for fields like gender, predefined options eliminate inconsistencies.

Numeric Range Validation

Range validation catches obvious entry errors. Age fields should only accept values between reasonable limits (0-120), preventing errors like “245” when “24” was intended.

Date Format Enforcement

Date columns should be restricted to specific formats. This prevents automatic conversion issues where text like “OCT4” becomes interpreted as “October 4th” instead of remaining as a gene identifier.

Common Issues and Solutions

The Spreadsheet Problem: Why Excel Files Are Dangerous

While spreadsheets appear similar to CSV files, they create unique data integrity problems that make them unsuitable for reliable data storage:

The Multiple Tables Problem: One of the most common issues with Excel files is cramming multiple related tables into a single sheet. This creates several critical problems:

- Analysis tools cannot distinguish where one table ends and another begins

- Column headers become ambiguous when tables share the same sheet

- Data relationships become unclear without proper separation

- Import processes often fail or produce corrupted results

For example, instead of one sheet with customer data, order data, and product data mixed together, create:

customers_master_2024.csvorders_transactions_2024.csvproducts_catalog_2024.csvdata_relationships_guide.txt(explaining how customer_id links tables)

Example of Hidden Corruption: A value might display as “1019” but actually be stored as “1019.5” due to automatic formatting. When exported to other formats, this hidden decimal appears and breaks integer assumptions.

Visual Formatting Problems

Color coding and highlighting cannot be processed programmatically and disappear during file conversion. This creates a major “formatting-as-data” problem.

The core issue: Color fills, bold text, and comments don’t travel between systems. If a value is “estimated” or “flagged,” add a boolean or a status column instead.

Don’t rely on visual formatting:

- Colors won’t survive export to CSV or database import

- Bold text disappears during data processing

- Cell comments are lost in most analysis tools

- Conditional formatting rules don’t transfer to other systems

Use explicit data columns instead: Status information should be stored in dedicated columns with values like “High Priority”, “Normal”, “Complete”, “Estimated”, or “Verified”. Machines can read that data reliably.

Computational Opacity Problems

Calculations should never be included in raw data files. Raw data should remain pristine, with all calculations performed in separate analysis files to prevent accidental data corruption.

In 2012, JP Morgan’s “London Whale” loss (over $6 billion) stemmed from a single Excel formula error in a risk model, which understated risk and encouraged reckless trades. Because the spreadsheet mixed data, calculations, and reporting in one place, the mistake went unchecked. Separating data from calculations prevents these catastrophic errors.

Getting Started with Cleaner Data

RepoTEN automatically enforces the data organization principles outlined in this guide. Teams can save datasets with proper naming conventions, documentation, and structure without manual effort. The system handles tidy data requirements, version control, and backup protocols by default. This eliminates the need for teams to remember complex procedures or develop their own data management standards.

Data organization practices require ongoing attention and consistent application. The time invested in proper structure and documentation reduces long-term analysis complexity and improves data reliability across the organization.